Text Segmentation Techniques for RAG: How to Enhance Retrieval-Augmented Generation Precision

Share

Text segmentation (chunking) is a critical component in Retrieval-Augmented Generation (RAG) systems, directly affecting retrieval quality and the accuracy of generated results. Choosing appropriate segmentation strategies not only improves retrieval precision but also reduces hallucinations, providing users with more accurate answers. This article explores text segmentation strategies in RAG and their impact on system performance.

Why is Text Segmentation Critical for RAG?

Text segmentation is the process of breaking large documents into smaller, manageable chunks that are then indexed and provided to language models during the retrieval phase. Efficient segmentation is crucial for RAG systems for several reasons:

- Overcoming token limitations: Large language models like GPT or Llama have token constraints. Segmentation ensures each unit fits within the model's processing window, preventing information truncation.

- Improving retrieval accuracy: Well-segmented documents maintain the integrity of relevant information, reduce noise, improve retrieval precision, and enable models to find the most relevant content.

- Preserving semantic integrity: Intelligent segmentation ensures semantic units remain intact, preventing sentences, paragraphs, or ideas from being arbitrarily cut off, allowing language models to generate coherent and accurate responses.

- Optimizing processing efficiency: Segmentation reduces computational load, enabling retrieval and generation components to work more efficiently, even with massive datasets.

- Enhancing user experience: Accurate segmentation allows RAG systems to provide more relevant and precise answers, improving user satisfaction.

Comparison of Major Segmentation Strategies

1. Fixed-Size Chunking

How it works: Divides text evenly based on predefined size (characters, words, or tokens).

Advantages:

- Simple implementation, no complex algorithms, low computational requirements

- Consistent processing results, easy to manage and index

- Suitable for large-scale data processing

Disadvantages:

- May cut through sentences or paragraphs, disrupting semantic integrity

- May include irrelevant information, reducing retrieval accuracy

- Doesn't consider document structure, lacks flexibility for different text types

Use cases: Uniformly structured content like encyclopedias; rapid prototyping; resource-constrained environments.

2. Sentence-Based Chunking

How it works: Splits text at natural sentence boundaries, ensuring each chunk contains complete thoughts.

Advantages:

- Preserves semantic flow, prevents sentence interruption

- Improves retrieval relevance, produces more meaningful matches

- Flexibility to adjust the number of sentences per chunk for different use cases

Disadvantages:

- Sentence length variations lead to uneven chunk sizes, potentially affecting efficiency

- Sentence detection is challenging for poorly formatted or unstructured text

Use cases: Documents with clear sentence boundaries like news articles; applications where semantic preservation is crucial; semi-structured content.

3. Paragraph-Based Chunking

How it works: Divides text at paragraph boundaries, preserving the document's logical structure and flow.

Advantages:

- Maintains the document's original logic, preserving paragraph integrity

- Relatively simple implementation, especially for structured documents

- Provides more complete context for deeper understanding

Disadvantages:

- Significant paragraph length variations may lead to uneven chunk sizes

- Long paragraphs might exceed language model token limits

- Depends on the quality of the original document's paragraphing

Use cases: Well-formatted documents like papers and reports; tasks requiring broad context; documents with clear hierarchical structure.

4. Semantic Chunking

How it works: Determines chunk boundaries by analyzing semantic relationships between sentences, ensuring each chunk maintains thematic consistency.

Advantages:

- Maintains semantic integrity with coherent information in each chunk

- Improves retrieval quality by reducing irrelevant information interference

- Adapts to various document types and structures, offering strong flexibility

Disadvantages:

- Complex implementation requiring advanced NLP techniques

- High computational resource requirements, slower processing for large documents

- Requires careful tuning of similarity thresholds

Use cases: Complex or unstructured documents; topic-specific retrieval; large knowledge base organization.

5. Recursive Chunking

How it works: Takes a hierarchical approach to document segmentation, starting with large chunks and recursively breaking them down into smaller units until size requirements are met.

Advantages:

- Preserves document hierarchical structure

- Balances context with token limitations

- Flexibly adapts to different document types

Disadvantages:

- High implementation complexity

- Potentially uneven chunk sizes

- Resource-intensive processing

Use cases: Documents with clear structure like textbooks and technical manuals; models with strict token limitations; content with hierarchical organization.

6. Sliding Window Chunking

How it works: Maintains overlap between segments to reduce information loss at boundaries.

Advantages:

- Maintains contextual continuity, reducing information fragmentation at boundaries

- Improves retrieval completeness, avoiding missing cross-segment information

- Can be combined with other segmentation methods

Disadvantages:

- Creates content duplication, increasing storage and retrieval overhead

- May result in similar repetitive paragraphs in retrieval results

- Requires processing overlapping content during generation to avoid repetition

Use cases: Content requiring high contextual continuity; complex concept explanations; lengthy detailed explanations.

7. LLM-Assisted Chunking

How it works: Utilizes large language models to analyze text and suggest segmentation boundaries based on content structure and semantics.

Advantages:

- Extremely high precision, understanding deep semantic relationships

- Adaptively handles diverse content

- Can be customized with human stylistic preferences

Disadvantages:

- High computational cost, requiring API calls

- Slow processing speed, unsuitable for real-time applications

- Depends on LLM quality, results may be inconsistent

Use cases: High-value content; scenarios demanding extremely high retrieval precision; complex specialized domain documents.

Advanced Segmentation Techniques for Improving RAG Systems

Beyond basic segmentation strategies, the following advanced methods can further enhance RAG system performance:

Topic Shift Detection Based on Sentence Embedding Similarity

By calculating embedding vector similarity between adjacent sentences, automatically identify topic shift points as segmentation boundaries:

from sentence_transformers import SentenceTransformer, util

# Load pre-trained model

model = SentenceTransformer('all-MiniLM-L6-v2')

sentences = text.split(".")

embeddings = model.encode(sentences)

threshold = 0.75

chunks = []

current_chunk = [sentences[0]]

for i in range(1, len(sentences)):

sim = util.cos_sim(embeddings[i], embeddings[i-1])

if sim < threshold: # Similarity below threshold indicates topic change

chunks.append(".".join(current_chunk) + ".")

current_chunk = [sentences[i]]

else:

current_chunk.append(sentences[i])

This method accurately captures semantic transitions, particularly suitable for documents with rich topic variations, though it increases computational overhead.

FAQ Structure Recognition and Q&A Matching

For customer service knowledge bases and other common FAQ-formatted documents, regular expressions can identify Q&A structures:

import re

pattern = r"(Q[::]?.+?)(?=Q[::]|\Z)" # Match Q&A groups starting with Q

qa_chunks = [m.strip() for m in re.findall(pattern, text, flags=re.S)]

This method is especially suitable for highly structured customer service documents, precisely capturing each question as a core unit, improving retrieval accuracy.

Optimal Segmentation Methods for Customer Service Scenarios

In customer service knowledge base scenarios, text segmentation choice directly impacts intelligent customer service answer quality and user satisfaction. Customer service knowledge bases typically have unique characteristics: clear question-answer format, highly targeted content, and user queries usually pointing to specific issues. Based on these characteristics, here are the optimal segmentation strategies for customer service scenarios:

Question-Answer Pair Based Segmentation Strategy

Customer service knowledge bases are typically organized in FAQ or Q&A pair format. In this scenario, treating each Q&A pair as an independent text segment is the ideal segmentation method. Specifically, dividing the knowledge base according to each common question and its answer ensures each chunk corresponds to a complete Q&A pair.

This question-intent-based segmentation approach offers significant advantages:

- Higher retrieval precision: Each segment focuses on a specific question, allowing user queries to match relevant Q&A segments more accurately without mixing in irrelevant information. For example, when a user asks "How to change my email address," the system can directly match the chunk specifically addressing this question rather than a large text block containing multiple mixed questions.

- Complete context: Each chunk contains the full context needed to answer a particular question, without missing critical information due to excessive segmentation. This avoids situations where the model needs to piece together answers from multiple fragments, improving answer accuracy and fluency.

- Reduced repetition and interference: Users typically focus on one question per inquiry. After segmenting by Q&A pairs, the retrieval usually returns the corresponding FAQ answer without introducing interference from other Q&As, allowing the LLM to generate responses more focused on relevant content.

For example, if the knowledge base originally combined multiple Q&As into one segment, when a user queries "How do I change my email address?" the retrieved fragment might include another unrelated Q&A, adding noise; after segmentation optimization, the retrieval result will only contain content related to "changing email address"

The left image shows pre-optimization retrieved fragments containing two mixed Q&As, while the right image shows post-optimization retrieved fragments containing only the required Q&A, greatly enhancing matching precision.

Of course, in practical applications, chunk size should not be too large. For very long answers (such as multi-step operation instructions), they can be further broken down into key step sequences while maintaining semantic integrity, using sliding windows to maintain continuity. Overall, FAQ Q&A-based segmentation fits customer service scenarios perfectly: extensive practice shows that for tasks requiring fine-grained information retrieval (such as customer service Q&A), using fine-grained small chunks (such as single Q&As as chunks) typically achieves better results.

Implementing Efficient Customer Service Knowledge Bases with MaxKB

MaxKB is a ready-to-use, flexible RAG chatbot based on Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG), applicable to intelligent customer service, enterprise internal knowledge bases, academic research, and education scenarios. Unlike previously described "professional knowledge base tools," MaxKB is actually a complete RAG application system, providing full-process support from knowledge management to application deployment.

MaxKB has the following core features:

- Ready-to-use: Supports direct document uploads/automatic crawling of online documents, with automatic text segmentation, vectorization, and RAG (Retrieval-Augmented Generation) capabilities. This effectively reduces LLM hallucinations, providing higher-quality intelligent Q&A interaction experiences.

- Flexible orchestration: Equipped with powerful workflow engines and function libraries, supporting AI process orchestration to meet complex business scenario requirements.

- Seamless integration: Supports zero-code rapid integration into third-party business systems, quickly empowering existing systems with intelligent Q&A capabilities and improving user satisfaction.

- Model agnostic: Supports various large models, including private models (such as DeepSeek, Llama, Qwen, etc.) and public models (such as OpenAI, Claude, Gemini, etc.).

1. MaxKB Knowledge Base Segmentation Features

MaxKB supports two segmentation methods: Smart Segmentation and Advanced Segmentation.

Smart Segmentation:

- Markdown files automatically segmented by hierarchical headings (up to 6 levels), maximum 4096 characters per segment

- HTML and DOCX files recognize heading formats and segment by hierarchy

- TXT and PDF files segment by title markers, or by 4096 characters without markers

Advanced Segmentation:

- Supports custom segmentation separators (including various heading levels, blank lines, line breaks, spaces, punctuation, etc.)

- Supports setting individual segment length (50-4096 characters)

- Provides automatic cleanup functionality, removing redundant symbols

When importing documents, MaxKB offers the option to "Set titles as related questions." When checked, the system automatically sets all segment titles as related questions for that segment, improving matching precision.

After segmentation, you can preview segments and manually adjust any unreasonable segmentation:

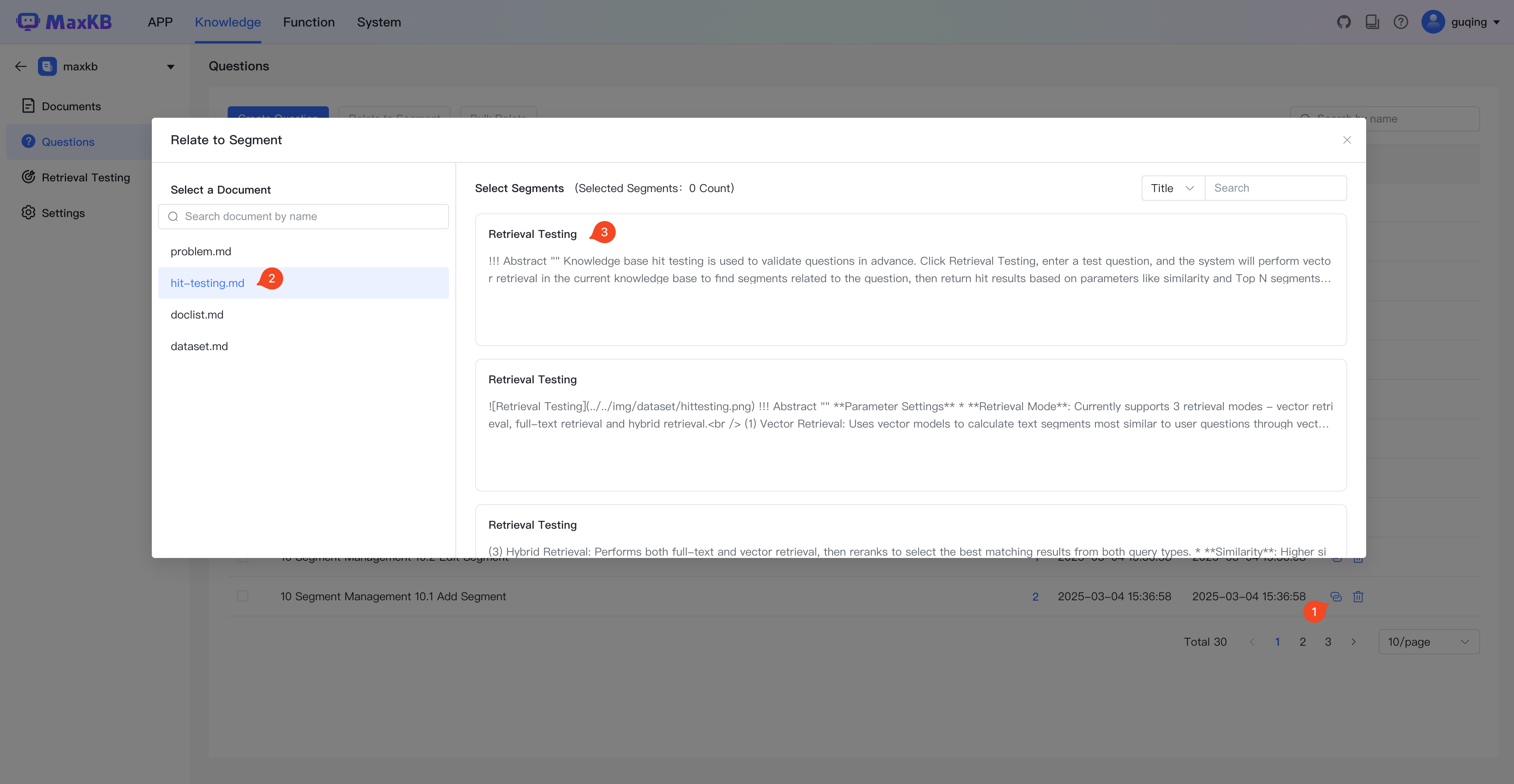

2. MaxKB Q&A Pair Management

In MaxKB, instead of using code, Q&A pair associations are managed through an intuitive user interface. The system provides multiple ways to set up and manage Q&A associations:

-

Segment Management Interface:

- Click on a document in the document list to enter the document segment management page

- Click on the segment panel to edit segment information and related questions in the segment details page

- When adding a segment, you can fill in the segment title, segment content (supporting markdown style editing), and related questions

-

Question Management:

- In the knowledge base management interface, you can create questions and associate them with document segments

- Click the "Create Question" button and enter questions line by line

- After adding questions, you can associate them with segments in the document; when users ask questions, the system will prioritize matching the question database to query associated segments

This user interface-based question management approach makes it easy for non-technical personnel to set up and optimize knowledge base Q&A pairing without writing code. MaxKB recommends setting related questions for segments so the system will prioritize matching related questions before mapping to segment content, improving matching efficiency and accuracy.

3. Document Processing Strategies

MaxKB supports multiple ways to process document hits:

- Model Optimization: When document segments are matched, prompts are generated according to application templates and sent to the model for optimization before returning

- Direct Answer: When document segments are matched and similarity meets the threshold, segment content is returned directly, suitable for scenarios requiring images, links, and other information

4. Automatic Question Generation

MaxKB also supports automatic question generation. By selecting files and clicking the "Generate Questions" button, AI models summarize file content to generate corresponding questions and automatically associate them, eliminating the need to manually write questions.

Practical Recommendations

- Organize based on question intent: Group content with similar question intent in the same chunk, even if expressed differently.

- Consider answer length variations: FAQ answer lengths can vary greatly. For extremely long answers (like detailed tutorials), further break them down into step sequences while maintaining semantic integrity.

- Preserve question context: Ensure each chunk contains the complete question so retrieval results can directly present the question-answer relationship.

- Adjust granularity dynamically: Simple questions (like "What are your business hours?") can use small chunks; complex questions (like "How do I request a refund?") require larger chunks that preserve complete process instructions.

- Periodic evaluation and optimization: Regularly analyze actual user consultations and retrieval data to identify which questions have poor retrieval performance and adjust segmentation strategies accordingly.

Segmentation Strategy Selection Reference Table

| Method | Precision | Speed | Implementation Complexity | Suitable Scenarios |

|---|---|---|---|---|

| Fixed-size chunking | ★★☆☆☆ | ★★★★★ | ★☆☆☆☆ | Simple tests, ambiguously structured text |

| Sentence-based | ★★★☆☆ | ★★★★☆ | ★★☆☆☆ | News articles, coherent narratives |

| Paragraph-based | ★★★☆☆ | ★★★★☆ | ★★☆☆☆ | Structured documents, academic papers |

| Semantic chunking | ★★★★☆ | ★★☆☆☆ | ★★★☆☆ | Complex documents, multi-topic content |

| Recursive chunking | ★★★★☆ | ★★★☆☆ | ★★★☆☆ | Hierarchical documents, technical manuals |

| FAQ recognition | ★★★★★ | ★★★★★ | ★★☆☆☆ | FAQ knowledge bases, customer service |

| LLM-assisted chunking | ★★★★★ | ★☆☆☆☆ | ★★★★☆ | High-value content, specialized domains |

Conclusion

Text segmentation, as a foundational component of RAG systems, is crucial for improving retrieval precision and generation quality. By selecting appropriate segmentation strategies and continuously optimizing them, RAG system performance can be significantly enhanced. Particularly in customer service scenarios, Q&A pair-based segmentation strategies can improve retrieval accuracy by 30%-50%, substantially reducing irrelevant information interference and providing users with more direct and precise answers.

Best practice is to select or combine different segmentation methods based on specific application scenarios and document characteristics, and continuously adjust and optimize through experimentation to find the optimal configuration balancing semantic integrity, retrieval precision, and computational efficiency.

As RAG technology continues to evolve, more intelligent segmentation methods will emerge, further improving the precision and application effectiveness of retrieval-augmented generation. Developers should closely monitor innovations in this field, continuously test and adopt new methods to build more powerful and accurate RAG systems.